AI, Human Expertise, and the Shift from Theory to Demonstrated Results

Introduction

AI, a Catalyst for Innovation: We are in the midst of a profound shift in the innovation landscape, driven largely by AI. With its ability to process vast amounts of information, generate ideas, and solve problems at unprecedented speeds, this technology is reshaping the way we innovate.

This transformation is not merely changing how we innovate; it fundamentally challenges our understanding of expertise, the value of traditional credentials, and how we validate new ideas.

The New Innovation Landscape

The Democratisation of Tools and Knowledge

The rise of open-source projects, cloud computing, and AI tools has created a more level playing field.

Individuals and small teams can now compete with established players, and they can experience the excitement of accessing resources once exclusive to well-funded "walled and moated" institutions.

Example: In 2020, DeepMind's AlphaFold 2 achieved results at the CASP14 assessment that were widely described as solving the 50-year-old protein folding problem. Similarly, Omega*'s open-source components (like Hospital AI simulacra) and the DBZ Comfort Index (CI) enable a global community to contribute to its development.

We apply this object-oriented process to quantum outward computing optimisation and complex systems modelling. This democratisation fosters an environment where innovation is accessible and accelerated by collective expertise.

AI as an Innovation Catalyst

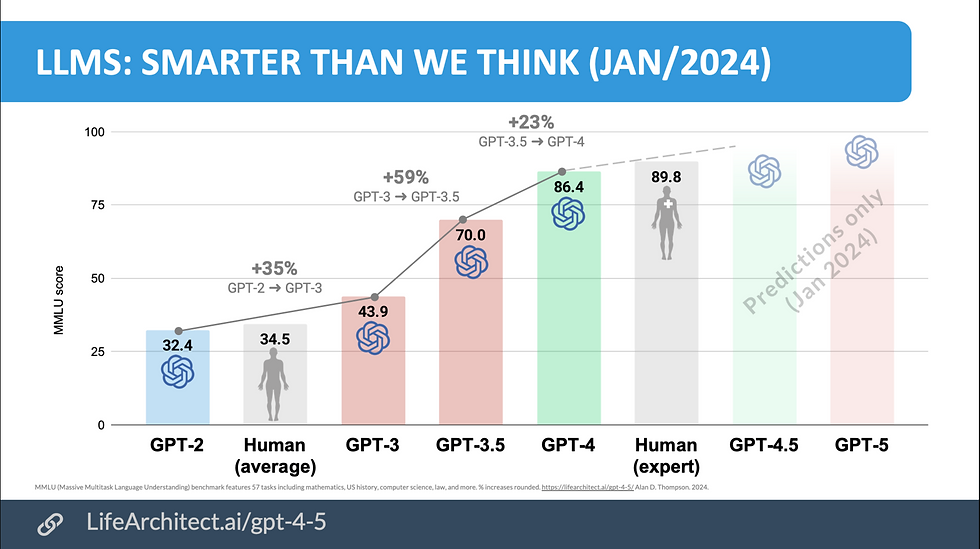

AI's Impact on Innovation: AI has emerged as a powerful force multiplier for innovation. Recent benchmarks provide measurable evidence of its role in accelerating the innovation process across many domains.

Detailed comparison showing GPT-4's performance in various tests compared to human averages, highlighting the breadth of AI capabilities across different domains.

| Comparison Aspect | Benchmark | AI Model Performance | Human Performance | Difference | Implications |
| --- | --- | --- | --- | --- | --- |
| Surpassing Human Experts | MMLU | Claude 3.5 Sonnet: 90.4 | Human Expert: 89.8 | +0.6 (0.67%) | AI models now exceed human expert performance in complex tasks. |
| Wide Performance Gap | GPQA | Claude 3 Opus: 59.5 | PhD Holder: 34.0 | +25.5 (75%) | AI demonstrates a significant advantage in problem-solving abilities. |
| Consistency Across Tasks | Multiple Benchmarks | Top AI Models: 85-90+ | Various Human Experts: 30-50 | Up to 60 points (120%) | AI performs consistently across varied cognitive tasks. |
| Rapid AI Advancement | Development Timeline | Multiple AI Models Exceeding Human | Traditional Human Training | N/A | AI models are developed and iterated rapidly, outpacing the slower traditional human expertise development. |

These results show that AI models can match and, on several benchmarks, surpass human expert performance in cognitive tasks. This positions AI as a pivotal player in the innovation ecosystem, enabling breakthroughs at speeds and scales previously unimaginable.

The Challenge to Traditional Timelines

The traditional multi-year PhD process and academic timelines need to be brought into sync with the rapid pace of technological advancement. For instance, mapping the human genome, which once took years, can now be accomplished in weeks or months thanks to advances in AI and software. The evidence suggests that a BSc graduate no longer needs to spend five years of a PhD programme, following a Master's degree, completing a single protein fold map.

Case Study: OpenAI's DALL-E 2, a text-to-image AI model, took approximately nine months from concept to public release. In our own work, the breakthrough of 26 November 2023, our implementation of Q*, led to the supporting functions K* (Knowledge) and O* (Observation), which went from calculus to code in four months. Integrating the Rutherford Quantum Constant (RQC) into Omega* took only two months, significantly enhancing its quantum computing capabilities.

These rapid timelines starkly contrast with traditional research cycles, often spanning several years, highlighting the need for faster, more agile approaches in today's innovation landscape.

Source: LifeArchitect.ai. Progression of AI models' scores over time, showing rapid improvement and surpassing human averages in various benchmarks.

The Human Factor: Bias and Resistance

Personal Motivations and Conflicts of Interest

Venture capitalists, academics with patent portfolios, and established tech companies often have vested interests that can influence their evaluation of new technologies. Those with shareholders to appease tend to resist genuinely disruptive innovations that challenge the status quo, which is why shifting the status quo so often goes hand in hand with the phrase "glacial speed."

Example: Omega* has to demonstrate its ability to solve NP-hard problems more efficiently than established algorithms. It has to face scepticism from academic institutions with investments in traditional methods.

Omega* will overcome this by providing verifiable, reproducible results through Wolfram Alpha procedural code. Although the code is not open source, the objective is to set a standard for transparency and challenge entrenched biases in AI development.

The Dunning-Kruger Effect, Expert Bias, and the Effects of the PhD "Publish or Perish" Culture

Accusations of the Dunning-Kruger effect are sometimes used to dismiss novel ideas. However, this can also be a defence mechanism employed by established experts who feel threatened by paradigm-shifting concepts. That reaction is understandable when one's world revolves around formalisation under an academic regime.

Historical Parallel: When Alfred Wegener proposed the theory of continental drift in 1912, the geological establishment dismissed him. It took decades to accept plate tectonics, demonstrating how expert bias can hinder revolutionary ideas. The recent AI benchmark results compel us to reconsider our notions of expertise and the value of traditional credentials in light of demonstrable AI capabilities.

Comparison of quality and empathy ratings for chatbot and physician responses, showing significant advantages for AI in both areas.

AI vs. Human Evaluation: A Data-Driven Comparison

The benchmark data reveals significant discrepancies between AI and human expert performance:

Surpassing Human Experts:

Benchmark: Massive Multitask Language Understanding (MMLU)

AI Model Performance: Claude 3.5 Sonnet achieved a score of 90.4.

Human Performance: Human experts scored 89.8.

Difference: +0.6 points (0.67% higher for AI)

Implications: AI models have reached a point where they can outperform human experts even in sophisticated cognitive tasks, demonstrating their potential as equal or superior collaborators in innovation.

Wide Performance Gap:

Benchmark: Graduate-Level Google-Proof Q&A (GPQA)

AI Model Performance: Claude 3 Opus scored 59.5.

Human Performance: A PhD holder scored 34.0.

Difference: +25.5 points (75% higher for AI)

Implications: The large gap between AI performance and PhD holders underscores the profound impact of AI in problem-solving domains, where AI's ability to process and analyse information rapidly provides a distinct advantage.

Consistency Across Tasks:

Benchmark: Various Cognitive Tasks and Benchmarks

AI Model Performance: Top AI models consistently score between 85 and 90+.

Human Performance: Human experts score between 30 and 50, depending on the task.

Difference: Up to 60 points (120% higher for AI)

Implications: AI models exhibit exceptional versatility, consistently ranking at the top across different cognitive benchmarks. This consistency highlights AI's broad applicability and ability to handle diverse tasks efficiently.

Rapid AI Advancement:

Development Timeline: AI models like Claude and GPT-4 have been iteratively developed in a matter of months.

Human Expertise Development: Traditional academic and training paths for achieving similar levels of expertise take years, such as the multi-year PhD process.

Implications: AI models' rapid development and iteration contrast sharply with human expertise's slower, traditional development. This acceleration in AI capabilities necessitates reevaluating how we perceive and leverage expertise in innovation.
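The difference figures quoted in this comparison follow from simple arithmetic, and a minimal Python sketch can make the calculation explicit. The function name `compare` and the dictionary layout are illustrative assumptions, not part of any benchmark tooling; the scores are the ones cited in this section.

```python
def compare(ai_score: float, human_score: float) -> tuple[float, float]:
    """Return (absolute difference in points, relative difference in percent).

    The relative difference uses the human score as the baseline,
    which is an assumption about how the quoted percentages were derived.
    """
    diff = ai_score - human_score
    relative = diff / human_score * 100
    return round(diff, 1), round(relative, 2)


# Scores as cited in this section: (AI model, human baseline).
benchmarks = {
    "MMLU": (90.4, 89.8),
    "GPQA": (59.5, 34.0),
}

for name, (ai, human) in benchmarks.items():
    points, percent = compare(ai, human)
    print(f"{name}: +{points} points ({percent}% relative to the human score)")
```

Where scores are given as ranges, as in the multi-benchmark comparison, the relative figure depends on which human score is taken as the baseline, so it is worth stating the baseline alongside any percentage.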

GPT-4's superior performance in soft skills compared to humans.

The Power of Demonstrated Results

In this complex landscape of rapid innovation, personal biases, and institutional resistance, empirical evidence and demonstrated results emerge as the ultimate arbiters of value.

Working Code as Proof of Concept

Just as Ernest Rutherford revolutionised our understanding of atomic structure through experimental evidence, today's innovators can leverage working code and tangible outputs as proof of concept.

Case Study: When Satoshi Nakamoto introduced Bitcoin in 2008, the working code that followed the whitepaper in January 2009 was crucial in demonstrating the feasibility of a decentralised digital currency. Similarly, AI benchmark results provide measurable evidence of AI capabilities, challenging traditional notions of expertise and innovation.

Why Demonstrated Results Matter

Tangible Outcomes: Working solutions and benchmark results provide measurable, verifiable evidence of capabilities.

Rapid Iteration: Functional prototypes and AI models allow quick refinement and improvement.

Real-world Application: Demonstrated results bridge the gap between theory and practice.

Overcoming Bias: Functional solutions and benchmark performances can overcome personal biases by demonstrating value regardless of origin.

Accessible Validation: A global community of peers can share, validate, and build upon demonstrated results.

Navigating the New Paradigm

Frontier innovation is exemplified by systems like Omega* and the impressive performance of AI models. To keep pace with it, we must challenge our assumptions and embrace new validation methods:

Embrace Hybrid Evaluation: Combine AI-driven analysis with human expertise for comprehensive evaluation, recognising the strengths of both.

Value Demonstrated Results: Prioritise working prototypes, empirical evidence, and benchmark performances over theoretical arguments or traditional credentials.

Foster Interdisciplinary Collaboration: Encourage cross-pollination of ideas across different fields, leveraging AI's broad knowledge base alongside human specialisation.

Recognise and Mitigate Biases: Be aware of personal and institutional biases that might hinder recognising genuinely innovative ideas or capabilities.

Adapt Evaluation Timelines: Align assessment processes with the rapid pace of modern innovation and AI development.

Encourage Responsible Innovation: Balance rapid development and AI deployment with careful consideration of ethical implications and long-term impacts.

Conclusion

The future of innovation lies not in clinging to traditional timelines, institutional authority, or outdated notions of expertise but in our ability to rapidly prototype, test, and refine ideas in the real world.

The benchmark data shows that AI models are not merely theoretical constructs but practical tools capable of matching and, on some benchmarks, exceeding human expert performance in complex cognitive tasks.

Fostering an environment that values empirical evidence over established hierarchies can create a more dynamic, inclusive, and practical innovation ecosystem.

The most successful innovators will be those who can navigate the complex interplay of AI augmentation and human creativity.

Again, this will come down to demonstrating the value of their ideas through tangible results. A measurable baseline helps to overcome scepticism, and a working solution makes the case more persuasively than argument alone.

As we propose and embrace this new paradigm, we open ourselves to possibilities where groundbreaking innovations can come from unexpected sources and ideas are judged on their merits rather than their pedigree. Feasibility, Viability, and Desirability are either friction points or easily interchangeable when any proposition is tabled. These fundamental elements determine the delivery pace and level of experience.

The innovation revolution is here. LLMs have moved past being tools and become "facilities". Systems like Omega* were designed to be open to this potential by augmenting LLMs as model-less instructors.

The question is not whether we will participate but how quickly we can adapt to and shape this new reality. The future belongs to those who can envision and demonstrate it, leveraging the power of AI while complementing it with uniquely human insights and ethical considerations.